Coding Assistance Case Study

There'll be time enough for counting when the dealing is done

I like coding assistants and I use them every day. They are equal parts amazing and boneheaded. There are so many variables and so much nuance contributing to the boneheaded results that I’m always looking for the straightforward use cases, the ones that should showcase their productivity-enhancing power.

Recently, I had what I thought was the classic use case for a coding assistant: remove the tedium and do in seconds something that would take me minutes or hours.

Case study

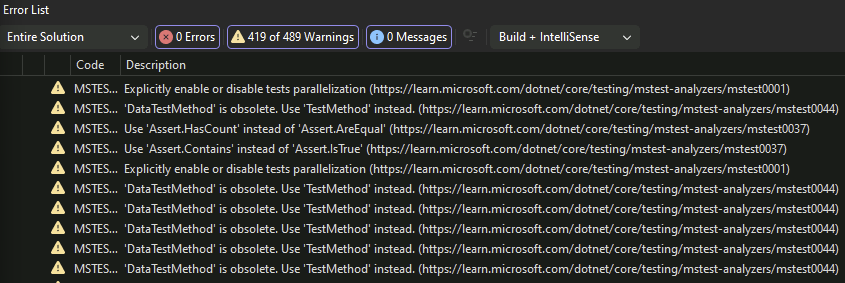

I recently upgraded my application’s MSTest tests to the newer Microsoft.Testing.Platform. The migration itself was done a few weeks back, but it left behind hundreds of analyzer warnings imploring me to adopt the new MSTest conventions.

Ripping through hundreds of tests and swapping out well-defined implementations seemed like the perfect use case for a coding assistant.

Take 1

I started out in Visual Studio Copilot with Claude Sonnet 4.5. I instructed Copilot to find and correct all of the MSTest analyzer warnings.

Copilot did a reasonable job of compiling the solution and analyzing the build output to identify the MSTest warnings. It started to replace occurrences of [DataTestMethod] with [TestMethod], which is reasonable. But it then spent at least twenty minutes processing, updated a few dozen occurrences, and declared victory. This was already off track: a simple find and replace would have accomplished the same thing in seconds, yet Copilot processed for a long time and still missed most of the occurrences. I then instructed it to finish the rest. Copilot more or less repeated the same process: a long processing time, a few warnings fixed, and then giving up again while declaring it was done. I tried this a few more times, each time prompting it to finish, and each time it started the cycle over. Eventually Copilot made a change that created a compiler error, and then went off the rails breaking and fixing unrelated things.
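For comparison, here is roughly what the "simple find and replace" could look like as a script. This is a minimal sketch, not what any of the tools ran: the function names `replace_data_test_method` and `rewrite_tree` are made up for illustration, it assumes the test files are UTF-8 encoded `.cs` files, and it only covers the [DataTestMethod] to [TestMethod] swap, not the other analyzer warnings. It also does not parse C#, so it would rewrite the name inside comments or strings as well.

```python
import re
from pathlib import Path

# Match the attribute name as a whole word, so [DataTestMethod] and
# [DataTestMethod, Timeout(1000)] are covered, but a longer identifier
# such as DataTestMethodAttribute is left alone.
DATA_TEST_METHOD = re.compile(r"\bDataTestMethod\b")


def replace_data_test_method(source: str) -> tuple[str, int]:
    """Return the rewritten source and the number of replacements made."""
    return DATA_TEST_METHOD.subn("TestMethod", source)


def rewrite_tree(root: str) -> int:
    """Rewrite every .cs file under root in place; return total replacements."""
    total = 0
    for path in Path(root).rglob("*.cs"):
        # newline="" keeps the file's existing line endings untouched.
        with open(path, encoding="utf-8", newline="") as f:
            original = f.read()
        updated, count = replace_data_test_method(original)
        if count:
            with open(path, "w", encoding="utf-8", newline="") as f:
                f.write(updated)
            total += count
    return total
```

Calling `rewrite_tree("path/to/tests")` on a hypothetical test directory would return the number of attributes changed. Running it on a clean working tree makes the result easy to review as a diff before committing.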

I eventually stopped it. This exercise took me a few hours and I didn’t really have anything useful to show for it.

Take 2

I decided to give Copilot CLI with GPT-5-mini a shot. The experience was different but similar. On the first attempt, Copilot decided to suppress the warnings rather than fix them. After I rejected the warning suppression change, Copilot performed much like the Visual Studio attempt, although it required far more interaction. It stopped frequently, requiring me to intervene. It often declared it was done. Sometimes it claimed there were no more warnings several times in a row before eventually continuing.

I eventually stopped it. This exercise took me a few hours and I didn’t really have anything useful to show for it.

Take 3

I decided to try Rider with Junie for a completely different tooling perspective. The results were similar. In this case it did seem to perform some edits successfully, albeit extremely slowly, spending hours to get about 20% of the way through. It also crashed a few times, and I had to intervene and tell it to continue. But like the others, it took too long and had to be periodically corrected, prompted to continue, or reminded that it wasn’t done, which made it unhelpful.

Summary

Needless to say, these tools did not remove the tedium of a rudimentary task. In fact, they failed miserably. Now one could argue it was too many files, or too vague an instruction (I should have told it to use find and replace, for example), but isn’t this what they’re supposed to be good at? If they can’t do this, how much autonomy can we give these things?

Even if it had taken hours to complete, doing so without intervention would have had some value. I could have let it do its work and come back at the end of the day to find it all done. But they couldn’t even do that.

I often feel like a gambler. When I get the good result in the morning, and the coding assistant does something impressive or makes things easier for me, I should take my winnings for the day and walk away from the table. If I play long enough, inevitably I lose my winnings.

I use Claude for writing and my experience is pretty much the same. Sometimes it manages to write a decent take on, say, estrogen metabolism. Sometimes it gets confused and writes about androgens instead. Prompts matter, but most of the time I either have to rewrite from scratch or make so many edits that there is no difference in time spent. I don’t complain, because Claude breaks my writer’s block and that’s great.